User:Easyhead

ADempiere

MY PERSONAL DETAILS

((¯`·-»easyhead«-·´¯))

Hello hello! My friends call me Izi, so now I want to write the story of my life =). I was born in 1985, which makes me the right age to have lived through the 1990s and to remember them :p. I live in the town of Kuala Terengganu, Malaysia. For now I'm working with RED PEAR, an IT company, and working hard for Mr Red1. I'm a person who cares about my work but, sadly, I'm too lazy to enjoy the effort involved in writing "big" articles. However, I do sometimes forget myself and write a lot when I find a subject that I really like. :))

- Now I'm gonna show the flow of my project's source system.

Strange But True

It is possible for a newbie to edit ADempiere/Wikipedia nearly every day and still be unknown to other contributors.

Replication Overview

- Replication is the process of copying the exact attributes of an object or instance. Data replication is the process of sharing information by copying data from one node to another, so that in the end all nodes receive and store the same data as its origin. Data replication is used to ensure consistency between redundant resources and to improve reliability and accessibility.

- Data replication is a current trend in business, especially for worldwide companies with franchises all over the world. Reliability, availability and consistency are three strong words that keep such a company surviving in a cruel world. Reliable, available and consistent data keeps a company ahead of its competitors, because these three qualities affect the decision making of top management and its ability to plan for the future by estimating what will happen from the figures it has.

- Data replication also has the advantage that data is replicated to multiple nodes, so if something happens, such as a disaster, the important data can still be recovered and a bigger loss can be avoided. Besides that, up-to-date data is always available, since all nodes receive the same data; therefore the information received is reliable and guaranteed to be the same as on the central node.
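A minimal Python sketch of the idea above, assuming each node is just an in-memory dictionary of records (the `Node` class and the `replicate` and `recover` functions are hypothetical names for illustration, not ADempiere code): a record is copied to every node, and a node that loses its data in a disaster is rebuilt from a surviving replica.

```python
import copy


class Node:
    """A hypothetical replica node: a name plus a dict of records keyed by id."""

    def __init__(self, name):
        self.name = name
        self.records = {}


def replicate(record_id, record, nodes):
    """Copy one record to every node so that all nodes store the same data."""
    for node in nodes:
        # deepcopy gives each node its own independent copy of the record
        node.records[record_id] = copy.deepcopy(record)


def recover(failed, source):
    """Rebuild a failed node's records from a surviving replica."""
    failed.records = copy.deepcopy(source.records)


central = Node("central")
branches = [Node("branch-1"), Node("branch-2")]
all_nodes = [central] + branches

replicate("C001", {"name": "Customer A", "credit": 5000}, all_nodes)

# Simulate a disaster at branch-1: all of its data is lost ...
branches[0].records.clear()
# ... and it is restored from the central node's copy.
recover(branches[0], central)
```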

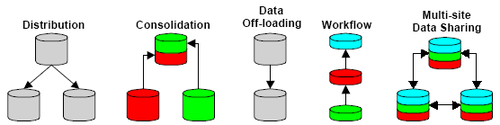

- There are many techniques for data replication, and the choice matters: if replication is not managed well, it can overload the network, slow it down and perhaps even cause lost connections with other nodes, because the data is being copied between nodes within one network. Most techniques in use today replicate either synchronously or asynchronously between nodes. The common architectures used in data replication are distribution, consolidation, data off-loading, workflow and multi-site data sharing; all of them can be implemented with Oracle7 replication, and the descriptions below follow Oracle's paper "Strategies and Techniques for Using Oracle7 Replication" (part A34042).

- Distribution. A typical example is that of a pricing list maintained by a company's headquarters and distributed to field offices. Usually the primary owner of this kind of information is the headquarters, and field offices use the information in a read-only manner.

- Consolidation. Information on sales figures may be owned, entered and maintained at the local sales office level. This data may also be partitioned so that each office only sees data related to its own sales. A headquarters office may require full read-only access to sales from all offices in order to make forecasts or to anticipate demand.

- Information Off-loading. In some companies, it may be desirable to reduce processing conflicts (in terms of system resources) between data entry activity and data analysis activity. An example may be an accounting system with a high transaction rate that also serves as a management reporting system. With replication, the data could be owned and maintained by the transaction system and replicated to another database location specially tailored for read-only management reporting.

- Disaster Site Maintenance. With full data replication, it becomes possible to maintain an accessible backup site for database operations. The “backup” site is fully accessible and can be used to test read-only functions, or provide extra processing capability to deal with sporadic user demands. In the event of a system failure, the backup location will queue up changes to the failed system and automatically forward them after recovery is complete. All of the prior examples employ a static, exclusive ownership model (often referred to as primary site ownership). An example of exclusive ownership where the data owner may change over time is best illustrated with a work flow example. This example illustrates dynamic exclusive ownership.

- Work flow. A work flow model illustrates the concept of dynamic exclusive ownership by passing ownership of data from database location to database location within a replicated environment. An example is a combined order entry and shipping system that places orders and assigns ownership based on the “state” of an order (entered, approved, picked, shipped, etc.). Another example is an employee expense reimbursement system in which expenses are routed to people and departments as part of an approval process. A replication work flow environment is extremely fault tolerant, allowing different functions along a flow to proceed independently in the event of failure at locations dedicated to other functions. All of the above models assume exclusive ownership of data. However, there are several applications that can benefit from some kind of shared-ownership, update-anywhere model.

- Multi-site Data Sharing. Some applications have a business practice that allows multiple locations and users to update or change information related to a single piece of data. Examples might include reservation information (at a front desk or airline counter and via a central phone number) or any data being worked on by a team (such as a problem report being worked on by a team of support analysts and technicians, or a global sales licensing deal with sales representatives from many countries adding terms and conditions). Shared ownership models must be aware of the possibility for conflicting simultaneous access that may result in data inconsistency (data conflicts). Sophisticated replication environments must be able to handle conflicts as they occur, and they should provide a declarative way to describe the methods needed to resolve them. Understanding the business rules around how to handle simultaneous conflicting access to data is crucial to any shared ownership implementation. Oracle’s replication options can enable the implementation of all of these scenarios.
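The synchronous/asynchronous distinction mentioned in the first item of this list can be illustrated with a small Python sketch. It is a simplification under stated assumptions: replicas are in-memory objects, `write_sync`, `write_async` and `flush` are hypothetical helpers, and "offline" is just a flag. Synchronous mode refuses a write unless every replica can take it immediately; asynchronous mode commits locally and queues the change per replica until that replica is reachable.

```python
from collections import deque


class Replica:
    """A hypothetical replica node that may be online or offline."""

    def __init__(self, name):
        self.name = name
        self.online = True
        self.data = {}
        self.pending = deque()  # changes queued for this replica (async mode)

    def apply(self, key, value):
        if not self.online:
            raise ConnectionError(f"{self.name} is unreachable")
        self.data[key] = value


def write_sync(primary, replicas, key, value):
    """Synchronous: reject the write unless every replica can apply it now."""
    if not all(r.online for r in replicas):
        raise ConnectionError("a replica is offline; write rejected")
    for r in replicas:
        r.apply(key, value)
    primary[key] = value


def write_async(primary, replicas, key, value):
    """Asynchronous: commit locally at once and queue the change per replica."""
    primary[key] = value
    for r in replicas:
        r.pending.append((key, value))


def flush(replicas):
    """Deliver queued changes in order; skip replicas that are still offline."""
    for r in replicas:
        while r.pending and r.online:
            key, value = r.pending[0]
            r.apply(key, value)
            r.pending.popleft()


primary = {}
a, b = Replica("a"), Replica("b")
write_sync(primary, [a, b], "price", 10)

b.online = False
try:
    write_sync(primary, [a, b], "price", 12)
    sync_rejected = False
except ConnectionError:
    sync_rejected = True  # synchronous mode cannot proceed while b is down

write_async(primary, [a, b], "price", 12)
flush([a, b])  # a is updated; b keeps the change queued
queued_while_offline = len(b.pending)

b.online = True
flush([a, b])  # b catches up once it is reachable again
```

The trade-off shown is the usual one: synchronous replication keeps all nodes identical at every moment but stalls when a node or link is down, while asynchronous replication keeps working and lets replicas lag until their queues are drained.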
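The static, exclusive (primary site) ownership used by the distribution and consolidation models above can be sketched like this. The `Site` and `PrimarySiteTable` classes, the site names and the figures are hypothetical; the point is only that each table has one owner that may write it, and every write is pushed to read-only subscriber sites.

```python
class Site:
    """A hypothetical database location holding replicated tables."""

    def __init__(self, name):
        self.name = name
        self.tables = {}  # table name -> {key: value}


class PrimarySiteTable:
    """Static exclusive ownership: only the owner site may update the table,
    and every update is pushed to the read-only subscriber sites."""

    def __init__(self, name, owner, subscribers):
        self.name = name
        self.owner = owner
        self.subscribers = list(subscribers)
        for site in [owner] + self.subscribers:
            site.tables.setdefault(name, {})

    def write(self, site, key, value):
        if site is not self.owner:
            raise PermissionError(f"{site.name} has read-only access to {self.name}")
        for s in [self.owner] + self.subscribers:
            s.tables[self.name][key] = value


hq = Site("HQ")
kl, kt = Site("KL office"), Site("KT office")

# Distribution: HQ owns the price list; field offices only read it.
prices = PrimarySiteTable("prices", owner=hq, subscribers=[kl, kt])
prices.write(hq, "item-1", 9.90)

# Consolidation: each office owns its own sales partition; HQ reads them all.
sales_kl = PrimarySiteTable("sales_kl", owner=kl, subscribers=[hq])
sales_kt = PrimarySiteTable("sales_kt", owner=kt, subscribers=[hq])
sales_kl.write(kl, "jan", 1200)
sales_kt.write(kt, "jan", 800)

# HQ can total all offices' sales from its read-only copies.
total = sum(v for t in ("sales_kl", "sales_kt") for v in hq.tables[t].values())
```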
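For the work flow model above, dynamic exclusive ownership can be sketched by deriving the owner from the order's state, so ownership passes along as the order moves through entered, approved, picked and shipped. The state-to-site mapping and the `Order` class are hypothetical, chosen only to mirror the order entry and shipping example.

```python
# Hypothetical mapping: which site owns an order while it is in each state.
OWNER_BY_STATE = {
    "entered": "approvals",
    "approved": "warehouse",
    "picked": "shipping",
    "shipped": "shipping",
}
NEXT_STATE = {"entered": "approved", "approved": "picked", "picked": "shipped"}


class Order:
    def __init__(self, order_id):
        self.order_id = order_id
        self.state = "entered"
        self.history = []  # (site, state it moved the order out of)

    @property
    def owner(self):
        """Ownership is derived from the state, so it moves as the order moves."""
        return OWNER_BY_STATE[self.state]

    def advance(self, site):
        """Only the current owner may update the order; ownership then passes on."""
        if site != self.owner:
            raise PermissionError(
                f"{site} does not own {self.order_id} in state {self.state!r}")
        if self.state not in NEXT_STATE:
            raise ValueError(f"{self.order_id} is already {self.state}")
        self.history.append((site, self.state))
        self.state = NEXT_STATE[self.state]


order = Order("SO-1")
order.advance("approvals")  # entered  -> approved, now owned by warehouse
order.advance("warehouse")  # approved -> picked,   now owned by shipping
order.advance("shipping")   # picked   -> shipped (final state)
```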
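The conflict handling described in the multi-site data sharing item can be sketched as follows. This is a minimal Python illustration, not Oracle's mechanism: it assumes each replicated change carries the value the sender saw before updating (`old`), detects a conflict when the receiving site's value no longer matches it, and then looks up a resolution method in a declarative table (`RESOLUTION`). The two methods shown, "latest timestamp wins" and "additive", and all names are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass
class Change:
    """A replicated update to one column of a shared row (hypothetical format)."""
    column: str
    old: object  # value the sending site saw before its update
    new: object  # value the sending site wrote
    ts: float    # time at which the sending site made the change


def latest_timestamp(current, current_ts, change):
    """Keep whichever value was written later."""
    if change.ts > current_ts:
        return change.new, change.ts
    return current, current_ts


def additive(current, current_ts, change):
    """Add the sender's delta to the local value (e.g. seats sold)."""
    return current + (change.new - change.old), max(current_ts, change.ts)


# Declarative part: the resolution method chosen for each column.
RESOLUTION = {"status": latest_timestamp, "seats_sold": additive}


def apply_change(row, stamps, change):
    """Apply a replicated change; return True if a conflict had to be resolved."""
    current = row[change.column]
    if current == change.old:  # row unchanged since the sender read it
        row[change.column] = change.new
        stamps[change.column] = change.ts
        return False
    resolve = RESOLUTION[change.column]
    row[change.column], stamps[change.column] = resolve(
        current, stamps[change.column], change)
    return True


# Front-desk copy of a reservation already edited locally
# (seats 10 -> 12 at ts 2.0, status "open" -> "held" at ts 5.0).
row = {"status": "held", "seats_sold": 12}
stamps = {"status": 5.0, "seats_sold": 2.0}

# Meanwhile the phone centre sold 3 seats (10 -> 13) and closed the booking.
c1 = apply_change(row, stamps, Change("seats_sold", old=10, new=13, ts=3.0))
c2 = apply_change(row, stamps, Change("status", old="open", new="closed", ts=4.0))
status_after_conflict = row["status"]  # local "held" (ts 5.0) is newer, so it wins

# A later change made on top of the current value applies without conflict.
c3 = apply_change(row, stamps, Change("status", old="held", new="closed", ts=6.0))
```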


Replication Schema

Trifon used JMS (Java Message Service) to carry out the replication: the data is sent as messages in the form of XML files. The basic concept of this scheme is shown below:

Local Producer -> Local JMS Server -> Remote JMS Server -> Local Consumer
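The message format is not specified here beyond "XML", so the following is only a sketch of what an export and import step could look like, using Python's standard `xml.etree.ElementTree`. The element layout (table name as root, one child element per column) and the `export_record` / `import_record` functions are assumptions for illustration, not the actual ADempiere export format; `C_BPartner`, `Value` and `Name` are used merely as ADempiere-style example names.

```python
import xml.etree.ElementTree as ET


def export_record(table, record):
    """Export step (sketch): turn one record into an XML document string."""
    root = ET.Element(table)
    for column, value in record.items():
        ET.SubElement(root, column).text = str(value)
    return ET.tostring(root, encoding="unicode")


def import_record(xml_text):
    """Import step (sketch): parse the XML back into (table, record).
    Every value comes back as text; a real importer would restore types."""
    root = ET.fromstring(xml_text)
    return root.tag, {child.tag: child.text for child in root}


msg = export_record("C_BPartner", {"Value": "C001", "Name": "Customer A"})
print(msg)  # <C_BPartner><Value>C001</Value><Name>Customer A</Name></C_BPartner>
table, rec = import_record(msg)
```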

- Central Node to Remote Node

  - The master data is created on the central node, known as the Local Producer.

  - When the master data has been created and replication is about to be processed, the master data is first exported by the export processor, which transforms it into an XML file.

  - This XML file is then sent to and stored in the local JMS server.

  - After the file has been stored, it is sent to the Remote JMS Server (client JMS).

  - The file is processed by the import processor before the master data is replicated into the remote node.

- Remote Node to Central Node

  - Data is created on the remote node, but this time the remote node is the Local Producer.

  - When the data has been created and replication is about to be processed, the data is exported by the export processor at the remote node.

  - An XML file is created, then sent to and stored in the local JMS server (on the remote node).

  - After the file has been stored, it is sent to the Remote JMS Server (on the central node).

  - The file is processed by the import processor at the central node before the data is replicated.

- Remote to Remote

  - Data is created on a remote node, but this time the sending remote node is the Local Producer.

  - When the data has been created and replication is about to be processed, the data is exported by the export processor at the sending remote node.

  - An XML file is created, then sent to and stored in the local JMS server (on the sending remote node).

  - After the file has been stored, it is sent to the Remote JMS Server (on the other remote node).

  - The file is processed by the import processor at the other remote node before the data is replicated.
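The three directions above all follow the same pattern: export to XML, store on the local JMS server, forward to the remote JMS server, then import. The Python sketch below simulates that pattern in memory, with plain queues standing in for JMS servers; it does not use the JMS API, and the `Node` class with its `produce`, `forward` and `consume` methods is hypothetical. Record values are kept as strings so that the XML round trip returns them unchanged.

```python
import xml.etree.ElementTree as ET
from collections import deque


class Node:
    """A hypothetical node with its own database and (simulated) JMS server."""

    def __init__(self, name):
        self.name = name
        self.db = {}              # records keyed by (table, key)
        self.outgoing = deque()   # messages stored on the local JMS server
        self.incoming = deque()   # messages received from other JMS servers

    def produce(self, table, key, record, target):
        """Local Producer + export processor: save locally, export to XML,
        and store the message on the local JMS server first."""
        self.db[(table, key)] = dict(record)
        root = ET.Element(table, key=key)
        for column, value in record.items():
            ET.SubElement(root, column).text = value
        self.outgoing.append((target, ET.tostring(root, encoding="unicode")))

    def forward(self):
        """Local JMS server -> Remote JMS Server of each message's target."""
        while self.outgoing:
            target, xml_text = self.outgoing.popleft()
            target.incoming.append(xml_text)

    def consume(self):
        """Local Consumer + import processor: XML -> record in this node's db."""
        while self.incoming:
            root = ET.fromstring(self.incoming.popleft())
            record = {child.tag: child.text for child in root}
            self.db[(root.tag, root.get("key"))] = record


central, remote_a, remote_b = Node("central"), Node("remote-a"), Node("remote-b")
nodes = (central, remote_a, remote_b)

central.produce("C_BPartner", "C001", {"Name": "Customer A"}, remote_a)  # central -> remote
remote_a.produce("C_Order", "SO-1", {"Total": "150.00"}, central)        # remote -> central
remote_b.produce("C_Order", "SO-2", {"Total": "80.00"}, remote_a)        # remote -> remote

for node in nodes:
    node.forward()
for node in nodes:
    node.consume()
```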

As we can see, before the data is sent to or received from another node, it is stored first. So if something happens to the connection between the nodes in the middle of a transfer, such as a lost connection, the data can be recovered as soon as the connection is online again.
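The store-then-send behaviour described above is the classic store-and-forward pattern. A minimal sketch, assuming an in-memory queue instead of a real JMS server and a hypothetical `Link` object that can go down: a message is removed from the local store only after it has been sent successfully, so anything queued during an outage goes out, in order, once the connection is back.

```python
from collections import deque


class Link:
    """A hypothetical network link between two JMS servers that may go down."""

    def __init__(self):
        self.up = True
        self.delivered = []

    def send(self, message):
        if not self.up:
            raise ConnectionError("connection lost")
        self.delivered.append(message)


class LocalStore:
    """Store-and-forward: keep each message until the link accepts it."""

    def __init__(self, link):
        self.link = link
        self.pending = deque()

    def put(self, message):
        self.pending.append(message)  # stored first, before any send attempt
        self.flush()

    def flush(self):
        while self.pending:
            try:
                self.link.send(self.pending[0])
            except ConnectionError:
                return  # keep the message; retry on the next flush
            self.pending.popleft()  # removed only after a successful send


link = Link()
store = LocalStore(link)
store.put("<msg>1</msg>")

link.up = False            # connection lost
store.put("<msg>2</msg>")
store.put("<msg>3</msg>")
waiting = len(store.pending)

link.up = True             # connection back online
store.flush()              # queued messages go out in their original order
```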

Besides that, the receiver gets exactly the data the sender sent. Therefore the data is reliable and can be updated regularly from either the remote nodes or the central node, so the problem of inconsistent redundant copies or corrupted data is overcome: top management can do their job and make decisions while other staff input the data, and the only source of error left to be concerned about is the human factor itself. This scheme is also more convenient than using the importLoader from ADempiere, because the importLoader only creates a file; we then need to distribute it manually to the other nodes and import it back into each server. Everything is done manually, and as humans we sometimes forget to do so, or sometimes we are too lazy to do it :). Therefore this scheme saves time and makes the work faster and more convenient.

My Contact

- mizi

- headpeac3@yahoo.com <== email/IM